Inside the Sausage Factory

23 July, 2019

Update 12/2021: A lot has changed in the couple of years since this post was originally written, and it’s long overdue a bit of an update! Pretty much all of the ‘ancient-yet-sprightly’ Blades have been replaced with much more modern servers in an effort to increase performance and reduce power consumption. We’ve also upgraded to 10GbE networking throughout, and best of all we have a shiny new Ceph cluster thanks to the amazing folks at SoftIron! There’ll be a follow-up post soon with some insight into the work we’ve done…

Part of the reason why we chose such a ridiculous name for Sausage Cloud was that, although everyone loves a sausage, no one really wants to know where they come from or how they’re made, much less to look inside the sausage factory itself 🙀. This seems apt for someone running infrastructure services.

So in this post I’m going to do exactly that, and gaze at the horrors that lie within when it comes to running a cloud platform on a shoestring budget with blatant disregard for service because hobby project.

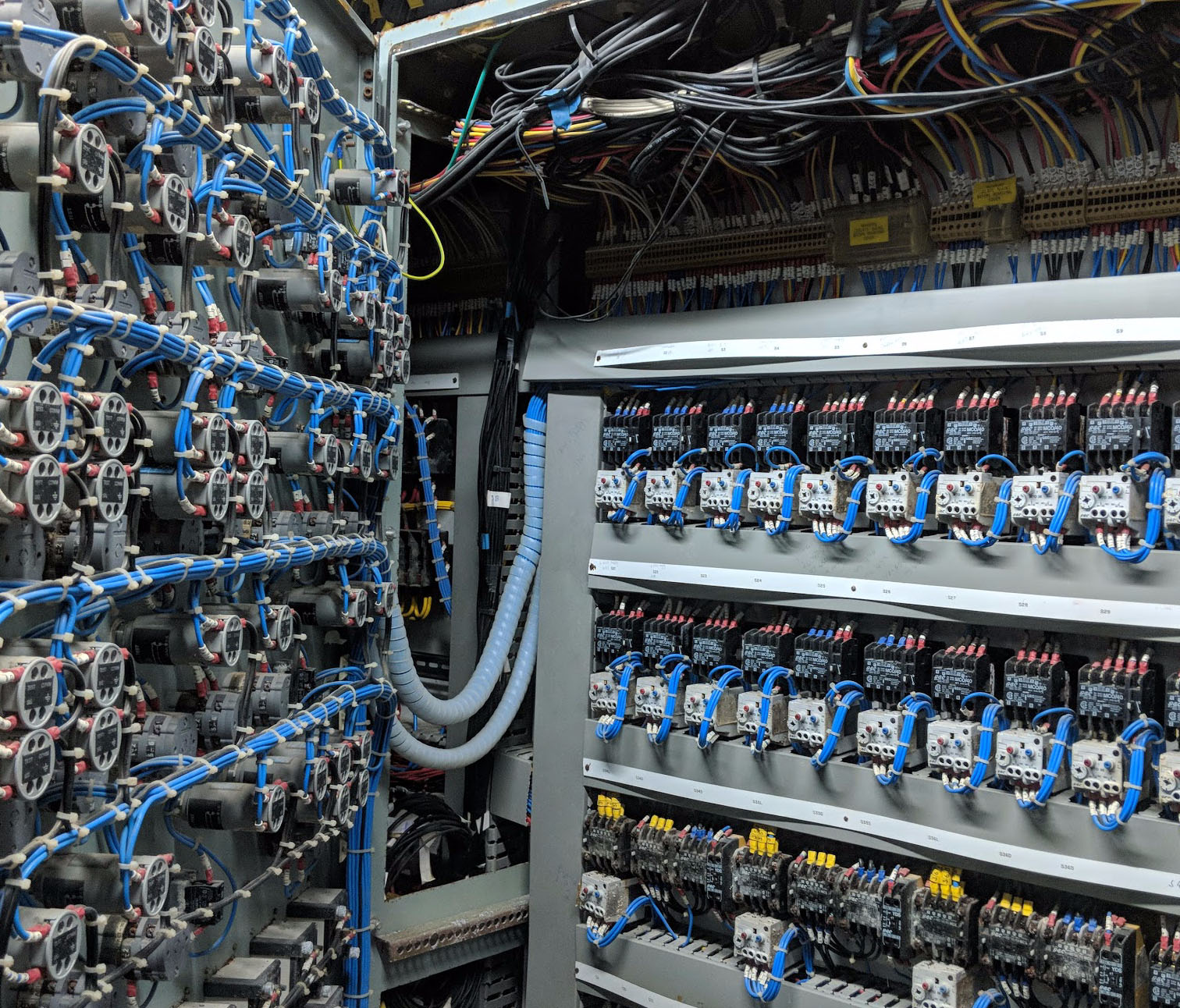

Hardware

The hardware that underpins Sausage Cloud is a set of ancient-yet-sprightly HP BL460c G6 Blades. I say sprightly because they’ve got a pair of mirrored 1TB disks sat in each one, which makes I/O just about bearable. The generation of Xeon that lies within (E5520) is power hungry and sadly too old for testing some nascent virtualisation technologies - specifically Kata and Firecracker - which is a shame because a number of us would like to play around with that stuff some more.

Networking is provided by a single Cisco 3750G 48-port switch, and we use a couple of passthrough looms in the Blade chassis itself to present a pair of 1GbE interfaces to each Blade. We’ve got VLANs defined in order to segregate each class of traffic, which broadly boils down to:

- Management

- Internet

- Overlay

- Out-of-band

You’ll notice there’s no dedicated VLAN for storage traffic; more on that a little later.

Routing and basic perimeter security is managed by a weedy Juniper SRX210.

For managing out-of-band (consoles etc.) we have to go via the HP BladeSystem’s “onboard administrator” which is a creaky old thing, and remains just about serviceable with the latest firmware applied. It only renders properly in Firefox though for some reason, and the virtual console stuff is only supported via a Java option 🤮. I keep a dedicated VM around on my laptop for exactly this sort of thing.

Deployment and automation

To manage the baremetal deployment of the cloud infrastructure we use Canonical’s MAAS. It does just enough of the basics in a reasonably sensible fashion to justify its existence, and it’s deployed to a dedicated Blade. I hate that this is a SPOF; all too often administrators overlook the importance of such core infrastructure, and we’re guilty here. We all have our reasons.

This Blade also has a checkout of the Kolla-Ansible source code repository along with a corresponding Python virtualenv, and this is what’s used to deploy and configure the OpenStack deployment itself entirely in Docker containers from just 46 lines of configuration:

```
$ grep -o '^[^#]*' globals.yml | wc -l
46
```

And I’m sure there’s some redundant junk in there! Let that sink in for a minute as you gaze upon this expanse of blog. I can’t overstate how fantastic the Kolla project is, and why - even if you’re not interested in OpenStack - you should take a look. It’s born from operator experience and it’s comprehensive enough to cater for the majority of use-cases. If you can’t see it covering your use-case, chances are you’re thinking about Doing It Wrong™️.
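For anyone who doesn’t read grep fluently, here’s a rough Python equivalent of that line count: it counts every line that has at least one character before the first `#`, so commented-out options and blank lines don’t contribute. The sample configuration is purely illustrative; the option names are ones Kolla-Ansible has accepted, but the values are assumptions and not Sausage Cloud’s real settings.

```python
def count_effective_lines(text: str) -> int:
    """Count lines that have at least one character before the first '#'.

    This mirrors `grep -o '^[^#]*' | wc -l`: grep -o only prints
    non-empty matches, so blank lines and lines that start with '#'
    are skipped, while a line with a trailing comment still counts.
    """
    count = 0
    for line in text.splitlines():
        before_comment = line.split("#", 1)[0]
        if before_comment:
            count += 1
    return count


# Hypothetical globals.yml fragment, for illustration only.
SAMPLE = """\
# Illustrative values only, not the real configuration
kolla_base_distro: "ubuntu"
#kolla_install_type: "source"

network_interface: "eth0"  # a trailing comment still counts as a line
neutron_external_interface: "eth1"
enable_magnum: "yes"
enable_designate: "yes"
"""

print(count_effective_lines(SAMPLE))  # 5
```

Like the grep version, this is a blunt instrument: a line containing only whitespace before a `#` would still be counted.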

There’s a little PC Engines APU2 sat on one side which provides secure connectivity into the platform, with VPN access facilitated by Wireguard.

Controllers

Two of the ten Blades have been designated our control nodes. These run all of the core OpenStack API services, workers, and supporting junk such as memcached, Galera and RabbitMQ. The observant amongst you will have probably spat out your drink at the insane notion of running a two node Galera cluster, let alone RabbitMQ, but that’s exactly what I’ve done here, simply because I didn’t want to ‘waste’ another node that could be otherwise used for compute workloads. It’s possible to run a service called Galera Arbitrator that doesn’t persist any data and which just participates in cluster quorum election, but I gave that a miss because it’s not currently supported by Kolla. Instead I decided to YOLO my way through the deployment and just run with two nodes. To be honest, this isn’t quite as big a deal as it sounds, for a few reasons:

Firstly, our cloud is so small that it doesn’t really see all that much change in the environment. I can take a backup of Galera on a daily basis and that’s sufficient to be able to restore in the event of data loss. Secondly, remember that everything is wired into a single switch anyway - so partitioning is a lot less likely.

Finally, we’re accountable to no one but ourselves because this is a hobby project, but at the same time we’re proud enough to care - enough to give it sufficient thought to weigh it up anyway.
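To put some numbers on why a two node cluster raises eyebrows: majority-based quorum needs strictly more than half of the voters to be reachable. The sketch below is just that arithmetic, assuming one equal vote per node; it is not Galera’s actual implementation, which also supports weighted votes.

```python
def quorum_size(voters: int) -> int:
    """Smallest number of voters that forms a strict majority."""
    return voters // 2 + 1


def tolerated_failures(voters: int) -> int:
    """How many voters can be lost while a majority still remains."""
    return voters - quorum_size(voters)


for voters in (2, 3):
    print(
        f"{voters} voters: quorum needs {quorum_size(voters)}, "
        f"tolerates {tolerated_failures(voters)} failure(s)"
    )
```

With two voters both must be present, so losing either one costs you quorum. A third voter that holds no data, which is the role the arbitrator plays, is enough to survive the loss of a single node.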

Compute

There are five blades allocated for the task of running virtualised workloads. There’s not much to say about these - they pretty much get on with doing their job. I’ve been side-eyeing one of them recently though - compute5 - with some suspicion as workloads just don’t seem happy on there, but it keeps plodding along.

Networking

I’ve mentioned the physical networking aspects already. The virtual networking is taken care of by one or two lines in Kolla’s configuration (haha remember the good times we had with discovering how to do this ourselves with Puppet and Open vSwitch and oh why am I crying please hold me it’s dark also the pain), and this handles deploying Neutron and using VXLAN to tunnel the overlay for the virtually segregated tenant traffic. Just a couple of calls post-deployment (as a one-off task) to the Neutron API are required to define our provider network and specify a range of publicly-accessible IP addresses which can be allocated as floating IPs or used for router gateways, and we’re done.
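As a rough idea of what those couple of calls carry, here is a sketch that builds the two request bodies (one for the external network, one for its subnet) and sanity-checks the floating IP range first. The field names follow the Neutron v2 API, but everything else is an assumption made for illustration: the names `public` and `public-subnet`, the `flat` network type, the `physnet1` label and the documentation-only 203.0.113.0/28 range. The post doesn’t say which client or values were actually used.

```python
import ipaddress


def provider_network_payloads(cidr, pool_start, pool_end, gateway,
                              physnet="physnet1"):
    """Build Neutron request bodies for an external network and subnet.

    Returns (network_body, subnet_body, pool_size). All names and
    defaults are hypothetical placeholders.
    """
    net = ipaddress.ip_network(cidr)
    start = ipaddress.ip_address(pool_start)
    end = ipaddress.ip_address(pool_end)
    gw = ipaddress.ip_address(gateway)

    # Sanity checks before anything is sent to the API.
    if not (start in net and end in net and gw in net):
        raise ValueError("pool and gateway must sit inside the subnet")
    if start > end:
        raise ValueError("pool start must not be after pool end")
    if start <= gw <= end:
        raise ValueError("gateway must not fall inside the pool")

    network_body = {
        "network": {
            "name": "public",
            "router:external": True,
            "provider:network_type": "flat",
            "provider:physical_network": physnet,
        }
    }
    # A real request also needs "network_id", taken from the response
    # to the network creation call.
    subnet_body = {
        "subnet": {
            "name": "public-subnet",
            "ip_version": 4,
            "cidr": str(net),
            "gateway_ip": str(gw),
            "enable_dhcp": False,
            "allocation_pools": [{"start": str(start), "end": str(end)}],
        }
    }
    pool_size = int(end) - int(start) + 1
    return network_body, subnet_body, pool_size


network, subnet, size = provider_network_payloads(
    "203.0.113.0/28", "203.0.113.2", "203.0.113.14", "203.0.113.1"
)
print(size)  # 13
```

Thirteen addresses out of a /28, shared between floating IPs and router gateways, gives a feel for how quickly a small public pool gets used up.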

Our two (lol) designated controllers also happen to do double duty as network nodes, handling layer-3 traffic ingress and egress for all tenant traffic. We make use of VLAN tagged interfaces to ensure the appropriate segregation, otherwise everything works as it should. OpenStack components for the APIs and for network routing are configured for ‘high availability’, meaning that if we do lose one controller, the other one will assume responsibility for routing traffic in and out of wherever it needs to go. Behind the scenes this is made possible by keepalived.

As far as usage goes, the network is presented much like other public OpenStack cloud platforms and indeed AWS. You get the ability to create your own private networks, subnets and routers, and you can set a public (gateway) IP on a router in order to pass traffic to and from the Internet. Virtual machines get private IP addresses assigned, and you can allocate floating IPs from our small pool of public IPv4 addresses. These are our scarcest, most precious resource, and I think after tackling the persistent storage we’d look to IPv6 instead.

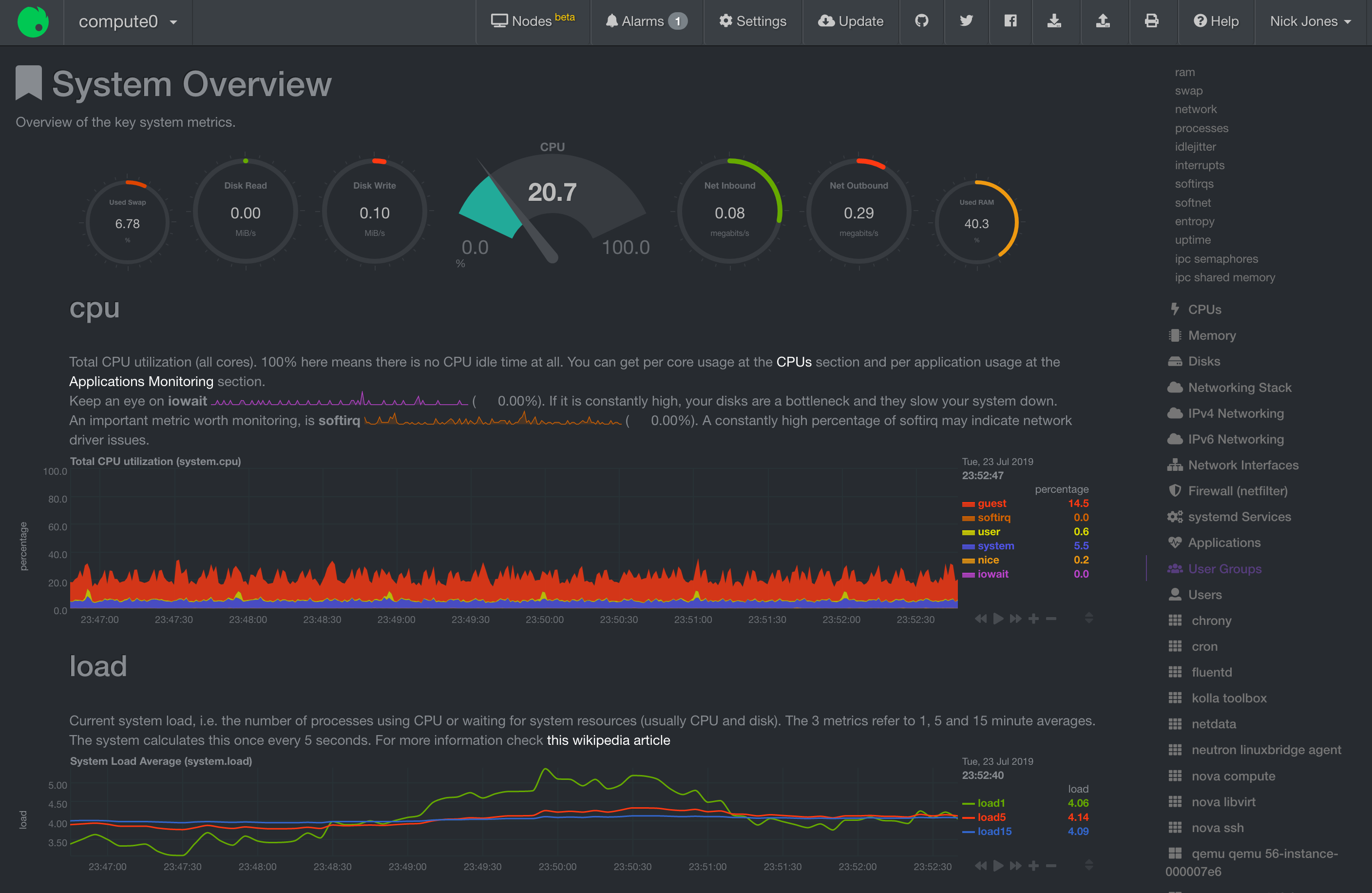

Monitoring

Much like DNS, monitoring is ever the misunderstood afterthought. And again, much like DNS, fatally so. Luckily we have some monitoring! What are we, hobbyist amateurs?! 😉

We collect all logs via fluentd, parse them via Logstash, send them on their merry way to Elasticsearch, and then scratch our heads over the Lucene query syntax using Kibana. We’ve also deployed Netdata; it seemed like a quick win at the time, but it offers up a lot of metrics with no easy way to aggregate or do anything especially useful other than a bit of ad-hoc analysis. It’s been good enough, but as soon as I have the time this is the area to which I’d like to pay some serious attention.

Usage

Most of the platform’s usage has centred around people being able to spin up a handful of VMs with a decent amount of memory allocated - 16 or 32GB per instance isn’t uncommon. This fits the pattern of being able to have a persistent, remote virtual environment in which to develop and test without having to worry about crazy bills. The flexibility of the per-tenant networking means that you can replicate some functionality that would otherwise be awkward to reproduce using ‘desktop’ virtualisation software. The CPU performance seems to matter a lot less for this sort of thing. In short, it’s a useful platform for those of us interested in developing and testing other cloud platforms. For example, I made use of it extensively when pulling together some bits of Terraform to get DC/OS to install via the Universal Installer.

What’s missing

Even though it’s such a budget deployment, the platform itself presents and supports a decent selection of services. Apart from all the base stuff required to run and manage virtual compute and networking, there are some additional supporting components that can keep our users well away from *spits* virtual machines. If you want to deploy Kubernetes, we have Magnum for that. If it’s good enough for CERN’s crazy number of clusters, then it’s good enough for you. We also have some niceties such as Designate for DNS-as-a-Service.

However, there is one glaring omission - I mean apart from the general infrastructure horror show: persistent storage. Right now you’re limited to ephemeral, which generally is fine; your virtual machine’s storage is local to the hypervisor (meaning it’s on an SSD), and it’s only ephemeral insofar as it lives and dies with the VM itself. For most of us right now this is more than good enough, but it would be nice to be able to mount software-defined block storage volumes on demand, so we’re working towards plugging that gap with Ceph.

Closing thoughts

Reading the above, you’d be forgiven for thinking that this sounds like a recipe for disaster. A painful exercise in learning what it takes to run a complex IaaS. After all, OpenStack is hard, right? Surprisingly enough, no. I mean, I live in daily dread of something happening which necessitates a trip to the Bunker (thankfully now not all that far away) and which means Matt and I have to put our hands in our pockets, but honestly it’s required very little involvement on our part to keep it ticking over. In the time since it was deployed, it’s seen a fair amount of usage, with over 2418 virtual machines popping into and out of existence, and I’ve had comments from people about the stability of their IRC bouncer running on a VM with 32GB of RAM.

If that’s not validation then I don’t know what is.

To close things out, here’s a picture of Matt after a job well done: