Tales from the Sausage Factory

15 January, 2023

It’s been far too long since the last Sausage Cloud update, and a lot has happened in the meantime, so here’s some news on what we’ve been up to. The platform itself is now much, much more resilient and performant than it was previously, and this is in no small part thanks to a few special folks and companies who have helped out on the hardware front.

Infrastructure

Networking

Since Sausage Cloud was first installed we’ve limped along with 1GbE, which has been mostly fine, since the networking that most folks care about is between their instance and the Internet. However, there was no way this was going to cut it if we were to provide network-attached storage, so the hunt began for a reasonably priced (i.e. free) 10GbE switch with enough ports to service all Blades plus whatever storage nodes we hung off the back.

When fellow Sausage enthusiast Bartosz mentioned that he had a couple of ancient (but perfectly serviceable) Juniper EX4500s going spare, we jumped at the chance to make use of them. They eventually made their way up to my flat in Edinburgh (via an eerie early-lockdown service station rendezvous with Danny), where we updated them to the latest firmware before making the trip to the Bunker. And if I had to describe these things with only one adjective, it’d be “loud”.

Eventually we were able to get one of these switches plumbed in and everything recabled. We followed the approach established with the 1GbE setup and used a couple of upgraded passthrough modules, and this piece of work paved the way for the next round of upgrades.

Storage

Perhaps the single biggest upgrade that Sausage Cloud has received during lockdown happened thanks to the awesome folks over at SoftIron, in particular Danny. The lack of a fast, reliable persistent storage solution was fine at first, but as usage grew it became more and more of a blocker for certain types of workloads. Whilst we could’ve cobbled something together in classic Sausage style, the fundamental infrastructure that underpins your platform (networking, storage) isn’t usually something you want to do a shitty job of, even by our standards. We’re also very much power constrained, and so the combination of requirements for distributed storage that was reliable (since it’s a trek to the Bunker, even for me), low-power and yet high-performance (“pick two”) meant that implementing it would cost a fair old chunk of change, way beyond the hobbyist or community funding that we ostensibly have.

After a few chats, SoftIron came to our rescue and offered to fit us out with their HyperDrive Ceph storage solution, with enough capacity and redundancy to more than meet our meagre requirements. A couple of months later, everything was lined up and ready to go. However, there was no point in getting the storage in until we had upgraded the networking from 1GbE to 10GbE, and that was no small undertaking, so the kit sat in the Bunker gathering dust for the best part of a year before we performed the aforementioned network upgrades. The stars also had to align for Danny to make the long trek up and spend the day doing the installation. Finally they did, and in mid-2021 we were able to get the SoftIron kit racked, cabled and provisioned in a day (!).

Compute

The “ancient-yet-sprightly” Blades we were using previously weren’t doing us any favours in terms of power, and as time wore on that sprightliness was becoming less and less true. So we’ve invested in some (OK, second-hand) G9 Blades with much newer CPUs, quicker memory and faster local SSDs. This has allowed us to consolidate workloads without sacrificing performance while at the same time lowering our overall power usage.

Platform

Rest assured, the software that powers Sausage Cloud hasn’t been neglected either. The OpenStack control plane is now properly redundant, with three nodes in the cluster. We’ve also been through a couple of rounds of OpenStack upgrades: we’re now on Yoga, with an upgrade to Zed planned soon.

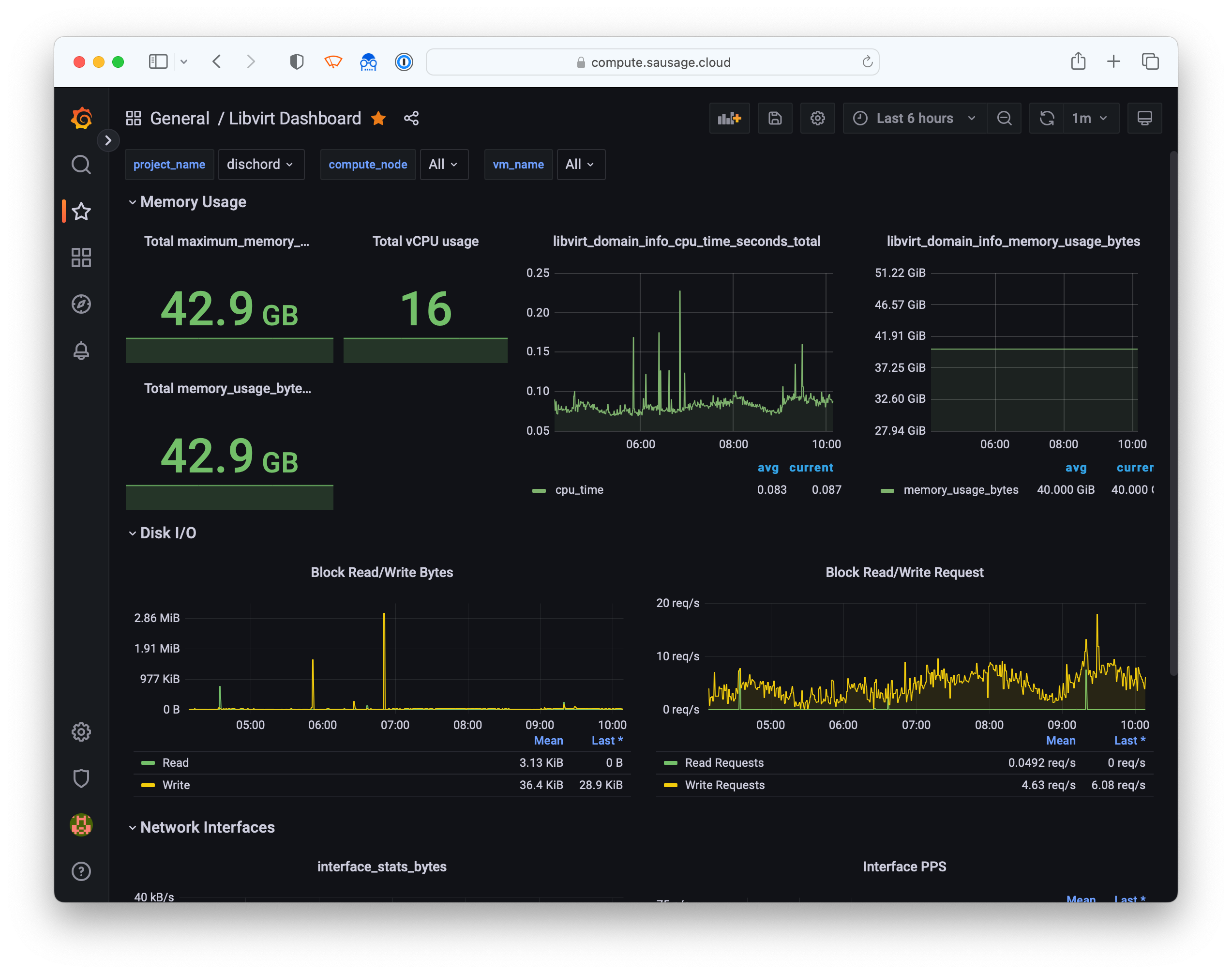

We’re also doing a much better job of monitoring! Previously we’d cobbled something together with Netdata, which worked OK, but for a while now Kolla-Ansible (K-A) has provided comprehensive roles for installing Prometheus plus the various exporters. So we’ve gone with that, plus some shiny new dashboards in Grafana, to give us much better visibility and awareness of what’s going on and where.

What’s next?

We’d be lying if we said that the recent energy crisis hadn’t hit us hard. It has, very much so. Our running costs are now nearly four times what they were, which is absolutely insane. Luckily everyone’s chipping in extra to cover this increase, which is a testament to the usefulness of something that started off as a hobby project.

If you think you’d like to get involved and make use of our platform then feel free to get in touch!